Environment

Operating system: CentOS 7.4 64-bit

Software versions: kubernetes-v1.9.6, etcd-v3.2.18, flannel-v0.10.0

Downloads:

https://dl.k8s.io/v1.9.6/kubernetes-server-linux-amd64.tar.gz
https://dl.k8s.io/v1.9.6/kubernetes-node-linux-amd64.tar.gz
https://github.com/coreos/etcd/releases/download/v3.2.18/etcd-v3.2.18-linux-amd64.tar.gz
https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

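Certificate generation

The Kubernetes components use TLS certificates to encrypt their communication. This document uses cfssl, CloudFlare's PKI toolkit, to generate the Certificate Authority (CA) and all other certificates. Install the cfssl tools first: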

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/{cfssl,cfssljson,cfssl-certinfo}

mkdir -p /etc/kubernetes/ssl

Note: from here on, all certificate-related files live in /etc/kubernetes/ssl.

On the Master node, create the JSON files that cfssl needs:

admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:masters",

"OU": "System"

}

]

}

k8s-gencert.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

k8s-root-ca-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

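kubernetes-csr.json

The following loop prints the 10.0.0.2 to 10.0.0.254 entries for the hosts list:

for ID in `seq 2 254`; do echo \ \ \ \ \"10.0.0.$ID\",; done

172.8.0.1 is the internal Services API address and 172.8.0.2 is the internal Services DNS address. More addresses from the 172.8.0.0/24 range can be reserved in the same way.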

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.8.0.1",

"172.8.0.2",

"10.0.0.2",

"10.0.0.3",

"10.0.0.4",

"10.0.0.5",

...

"10.0.0.254",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}
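The one-line loop above can be wrapped in a small function so the same list is easy to regenerate for a different address range. This is only a sketch: the function name gen_hosts and its three arguments are made up for illustration and are not part of cfssl.

```shell
#!/usr/bin/env bash
# gen_hosts PREFIX FIRST LAST   (hypothetical helper, not part of cfssl)
# Prints one quoted, comma-terminated entry per address, indented by
# four spaces, ready to paste into the "hosts" array of kubernetes-csr.json.
gen_hosts() {
  local prefix=$1 first=$2 last=$3 id
  for id in $(seq "$first" "$last"); do
    printf '    "%s.%s",\n' "$prefix" "$id"
  done
}

# Example: the first three node addresses used in this document.
gen_hosts 10.0.0 2 4
```

Every entry ends with a comma, so the pasted block must be followed by at least one more element (here the "kubernetes" DNS names) to keep the JSON valid.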

Generate the CA certificate and key:

cd /etc/kubernetes/ssl
cfssl gencert -initca k8s-root-ca-csr.json | cfssljson -bare k8s-root-ca

Generate the kubernetes, admin, and kube-proxy certificates:

cd /etc/kubernetes/ssl
for targetName in kubernetes admin kube-proxy; do
  cfssl gencert --ca k8s-root-ca.pem --ca-key k8s-root-ca-key.pem --config k8s-gencert.json --profile kubernetes $targetName-csr.json | cfssljson --bare $targetName
done

Copy the certificates to the other servers:

scp *.pem 10.0.0.3:/etc/kubernetes/ssl
scp *.pem 10.0.0.4:/etc/kubernetes/ssl
scp *.pem 10.0.0.5:/etc/kubernetes/ssl

etcd cluster setup

Add the host bindings to /etc/hosts:

10.0.0.3 etcd01
10.0.0.4 etcd02
10.0.0.5 etcd03

mkdir -p /data/etcd

10.0.0.3:/usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/data/etcd/
EnvironmentFile=-/etc/kubernetes/etcd.conf
ExecStart=/usr/local/bin/etcd \
  --name=etcd01 \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --initial-advertise-peer-urls=https://10.0.0.3:2380 \
  --listen-peer-urls=https://10.0.0.3:2380 \
  --listen-client-urls=https://10.0.0.3:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://10.0.0.3:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=etcd01=https://10.0.0.3:2380,etcd02=https://10.0.0.4:2380,etcd03=https://10.0.0.5:2380 \
  --initial-cluster-state=new \
  --data-dir=/data/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

10.0.0.4:/usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/data/etcd/
EnvironmentFile=-/etc/kubernetes/etcd.conf
ExecStart=/usr/local/bin/etcd \
  --name=etcd02 \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --initial-advertise-peer-urls=https://10.0.0.4:2380 \
  --listen-peer-urls=https://10.0.0.4:2380 \
  --listen-client-urls=https://10.0.0.4:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://10.0.0.4:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=etcd01=https://10.0.0.3:2380,etcd02=https://10.0.0.4:2380,etcd03=https://10.0.0.5:2380 \
  --initial-cluster-state=new \
  --data-dir=/data/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

10.0.0.5:/usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/data/etcd/
EnvironmentFile=-/etc/kubernetes/etcd.conf
ExecStart=/usr/local/bin/etcd \
  --name=etcd03 \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --peer-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --peer-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --initial-advertise-peer-urls=https://10.0.0.5:2380 \
  --listen-peer-urls=https://10.0.0.5:2380 \
  --listen-client-urls=https://10.0.0.5:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://10.0.0.5:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=etcd01=https://10.0.0.3:2380,etcd02=https://10.0.0.4:2380,etcd03=https://10.0.0.5:2380 \
  --initial-cluster-state=new \
  --data-dir=/data/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Start etcd on each of the three servers:

systemctl enable etcd
systemctl start etcd

Check the cluster health. If the output ends with "cluster is healthy", the etcd cluster is working:

etcdctl --ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  cluster-health

flannel network configuration

Extract flannel-v0.10.0-linux-amd64.tar.gz on all four servers (every node, Master and Nodes alike) and place the binaries at /usr/local/bin/{flanneld,mk-docker-opts.sh}.

flanneld config file /etc/kubernetes/flannel

# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
ETCD_ENDPOINTS="https://10.0.0.3:2379,https://10.0.0.4:2379,https://10.0.0.5:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
ETCD_PREFIX="/kubernetes/network"
# Any additional options that you want to pass
FLANNEL_OPTIONS="-etcd-cafile=/etc/kubernetes/ssl/k8s-root-ca.pem -etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem -etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem -iface=eth0"

flanneld unit file /usr/lib/systemd/system/flanneld.service

[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/kubernetes/flannel
EnvironmentFile=-/etc/kubernetes/docker-network
ExecStart=/usr/local/bin/flanneld \
  -etcd-endpoints=${ETCD_ENDPOINTS} \
  -etcd-prefix=${ETCD_PREFIX} \
  $FLANNEL_OPTIONS
ExecStartPost=/usr/local/bin/mk-docker-opts.sh -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service

Create the network configuration in etcd:

etcdctl --endpoints=https://10.0.0.3:2379,https://10.0.0.4:2379,https://10.0.0.5:2379 \
  --ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  mkdir /kubernetes/network

etcdctl --endpoints=https://10.0.0.3:2379,https://10.0.0.4:2379,https://10.0.0.5:2379 \
  --ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  mk /kubernetes/network/config '{"Network":"172.7.0.0/16","SubnetLen":24,"Backend":{ "Type": "vxlan", "VNI": 1 }}'
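The network configuration written to etcd above gives flannel a /16 pool from which each host leases one /24. A short sketch of that arithmetic, and of building the JSON from variables rather than typing it by hand, might look as follows. The helper names flannel_config and subnet_count are hypothetical; neither is part of flannel or etcdctl.

```shell
#!/usr/bin/env bash
# flannel_config NETWORK SUBNET_LEN VNI   (hypothetical helper)
# Prints the JSON document stored under /kubernetes/network/config.
flannel_config() {
  printf '{"Network":"%s","SubnetLen":%d,"Backend":{"Type":"vxlan","VNI":%d}}\n' "$1" "$2" "$3"
}

# subnet_count NETWORK_PREFIX_LEN SUBNET_LEN   (hypothetical helper)
# Upper bound on the number of per-host subnets: 2^(SUBNET_LEN - PREFIX_LEN).
subnet_count() {
  echo $(( 1 << ($2 - $1) ))
}

flannel_config 172.7.0.0/16 24 1
subnet_count 16 24
```

With the values used in this document the pool holds at most 256 /24 subnets, which bounds how many hosts can obtain a lease.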

Start flannel:

systemctl enable flanneld
systemctl start flanneld

Check the stored configuration and the subnets that have been leased:

etcdctl --endpoints=https://10.0.0.3:2379,https://10.0.0.4:2379,https://10.0.0.5:2379 \
  --ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  get /kubernetes/network/config

etcdctl --endpoints=https://10.0.0.3:2379,https://10.0.0.4:2379,https://10.0.0.5:2379 \
  --ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  ls /kubernetes/network/subnets

docker-ce installation

Note: install docker on the Node machines. hub.linuxeye.com is the author's private intranet registry and is shown for reference only; VMware Harbor is recommended.

wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
yum -y install docker-ce

docker.service

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
EnvironmentFile=-/run/flannel/docker
ExecStart=/usr/bin/dockerd --insecure-registry hub.linuxeye.com --data-root=/data/docker --log-opt max-size=1024m --log-opt max-file=10 $DOCKER_OPTS
ExecStartPost=/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target

After modifying docker.service, run:

systemctl daemon-reload
systemctl start docker

Note: docker must start after flannel.

Kubeconfig generation

On the 10.0.0.2 Master node, extract kubernetes-server-linux-amd64.tar.gz and put the extracted binaries in /usr/local/bin. The kubeconfig files record the cluster's connection and credential information and are essential to building the cluster.

export KUBE_APISERVER="https://10.0.0.2:6443"

Set the cluster parameters:

kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER}

Set the client credentials:

kubectl config set-credentials admin \
  --client-certificate=/etc/kubernetes/ssl/admin.pem \
  --embed-certs=true \
  --client-key=/etc/kubernetes/ssl/admin-key.pem

Set the context parameters:

kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin

kubectl config use-context kubernetes

Kubelet kubeconfig

Declare the kube-apiserver address:

export KUBE_APISERVER="https://10.0.0.2:6443"

Set the cluster parameters:

cd /etc/kubernetes
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

Set the client credentials:

cd /etc/kubernetes
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

Set the context parameters:

cd /etc/kubernetes
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

Set the default context:

cd /etc/kubernetes
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

cd /etc/kubernetes
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
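The same token value has to appear in both bootstrap.kubeconfig and token.csv, so BOOTSTRAP_TOKEN must still be set in the shell that writes token.csv. The sketch below shows one way to generate the token, sanity-check its shape, and format the CSV line. The function names gen_token, valid_token, and token_csv_line are illustrative only and are not kubectl features.

```shell
#!/usr/bin/env bash
# gen_token: 16 random bytes rendered as 32 lowercase hex characters,
# using the same pipeline as the export BOOTSTRAP_TOKEN line above.
gen_token() {
  head -c 16 /dev/urandom | od -An -t x4 | tr -d ' \t\n'
}

# valid_token TOKEN: succeeds only for exactly 32 lowercase hex characters.
valid_token() {
  [[ $1 =~ ^[0-9a-f]{32}$ ]]
}

# token_csv_line TOKEN: the line format this document writes to token.csv
# (token, user name, uid, quoted group).
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

token=$(gen_token)
if valid_token "$token"; then
  token_csv_line "$token"
else
  echo "unexpected token format: $token" >&2
fi
```

An empty or malformed token usually means the variable was lost between shells, which is cheaper to catch here than after kubelet fails to bootstrap.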

Kube-proxy kubeconfig

Declare the kube-apiserver address:

export KUBE_APISERVER="https://10.0.0.2:6443"

Set the cluster parameters:

cd /etc/kubernetes
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

Set the client credentials:

cd /etc/kubernetes
kubectl config set-credentials kube-proxy \
  --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
  --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

Set the context parameters. This step must run before use-context, otherwise the "default" context does not exist in kube-proxy.kubeconfig:

cd /etc/kubernetes
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

Set the default context:

cd /etc/kubernetes
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Copy the kubeconfig files to the other servers:

scp ~/.kube/config 10.0.0.3:/etc/kubernetes/kubeconfig
scp /etc/kubernetes/bootstrap.kubeconfig 10.0.0.3:/etc/kubernetes/bootstrap.kubeconfig
scp /etc/kubernetes/kube-proxy.kubeconfig 10.0.0.3:/etc/kubernetes/kube-proxy.kubeconfig
scp ~/.kube/config 10.0.0.4:/etc/kubernetes/kubeconfig
scp /etc/kubernetes/bootstrap.kubeconfig 10.0.0.4:/etc/kubernetes/bootstrap.kubeconfig
scp /etc/kubernetes/kube-proxy.kubeconfig 10.0.0.4:/etc/kubernetes/kube-proxy.kubeconfig
scp ~/.kube/config 10.0.0.5:/etc/kubernetes/kubeconfig
scp /etc/kubernetes/bootstrap.kubeconfig 10.0.0.5:/etc/kubernetes/bootstrap.kubeconfig
scp /etc/kubernetes/kube-proxy.kubeconfig 10.0.0.5:/etc/kubernetes/kube-proxy.kubeconfig

Master setup

Common config file /etc/kubernetes/config

###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://10.0.0.2:8080"

kube-apiserver config file /etc/kubernetes/kube-apiserver

###

## kubernetes system config

##

## The following values are used to configure the kube-apiserver

##

#

## The address on the local server to listen to.

KUBE_API_ADDRESS="--advertise-address=10.0.0.2 --bind-address=10.0.0.2 --insecure-bind-address=10.0.0.2"

#

## The port on the local server to listen on.

#KUBE_API_PORT="--port=8080"

#

## Port minions listen on

#KUBELET_PORT="--kubelet-port=10250"

#

## Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=https://10.0.0.3:2379,https://10.0.0.4:2379,https://10.0.0.5:2379"

#

## Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=172.8.0.0/16"

#

## default admission control policies

#KUBE_ADMISSION_CONTROL="--admission-control=ServiceAccount,NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction"

#

## Add your own!

KUBE_API_ARGS=" --enable-bootstrap-token-auth \
  --authorization-mode=RBAC,Node \
  --runtime-config=rbac.authorization.k8s.io/v1 \
  --kubelet-https=true \
  --service-node-port-range=30000-65000 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --tls-cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --service-account-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
  --etcd-cafile=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --etcd-certfile=/etc/kubernetes/ssl/kubernetes.pem \
  --etcd-keyfile=/etc/kubernetes/ssl/kubernetes-key.pem \
  --enable-swagger-ui=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/lib/audit.log \
  --event-ttl=1h"

kube-apiserver unit file /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Service
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
After=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kube-apiserver
ExecStart=/usr/local/bin/kube-apiserver \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_ETCD_SERVERS \
  $KUBE_API_ADDRESS \
  $KUBE_API_PORT \
  $KUBELET_PORT \
  $KUBE_ALLOW_PRIV \
  $KUBE_SERVICE_ADDRESSES \
  $KUBE_ADMISSION_CONTROL \
  $KUBE_API_ARGS
Restart=on-failure
RestartSec=15
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

kube-controller-manager config file /etc/kubernetes/kube-controller-manager

###
# The following values are used to configure the kubernetes controller-manager
# defaults from config and apiserver should be adequate
# Add your own!
KUBE_CONTROLLER_MANAGER_ARGS="--address=10.0.0.2 \
  --service-cluster-ip-range=172.8.0.0/16 \
  --cluster-cidr=172.7.0.0/16 \
  --allocate-node-cidrs=true \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
  --root-ca-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --leader-elect=true"

kube-controller-manager unit file /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kube-controller-manager
ExecStart=/usr/local/bin/kube-controller-manager \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_MASTER \
  $KUBE_CONTROLLER_MANAGER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

kube-scheduler config file /etc/kubernetes/kube-scheduler

###
# kubernetes scheduler config
# default config should be adequate
# Add your own!
KUBE_SCHEDULER_ARGS="--leader-elect=true \
  --address=10.0.0.2"

kube-scheduler unit file /usr/lib/systemd/system/kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler Plugin
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kube-scheduler
ExecStart=/usr/local/bin/kube-scheduler \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_MASTER \
  $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Enable and start the services:

systemctl enable kube-apiserver
systemctl enable kube-controller-manager
systemctl enable kube-scheduler
systemctl start kube-apiserver
systemctl start kube-controller-manager
systemctl start kube-scheduler

Node setup

kubelet

Create the bootstrap role binding (run on the 10.0.0.2 Master):

kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap

Note: all of the following steps run on every Node.

Common config file /etc/kubernetes/config

###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://10.0.0.2:8080"

Disable swap:

swapoff -a  # kubelet fails to start if swap stays enabled

Create the kubelet data directory:

mkdir -p /data/kubelet

kubelet config file /etc/kubernetes/kubelet

###

## kubernetes kubelet (minion) config

#

## The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=10.0.0.3"

#

## The port for the info server to serve on

#

## You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=10.0.0.3"

#

## pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=hub.linuxeye.com/rhel7/pod-infrastructure:latest"

#

## Add your own!

KUBELET_ARGS="--cluster-dns=172.8.0.2 \
  --experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \
  --kubeconfig=/etc/kubernetes/kubeconfig \
  --require-kubeconfig \
  --cert-dir=/etc/kubernetes/ssl \
  --cluster-domain=cluster.local. \
  --hairpin-mode promiscuous-bridge \
  --serialize-image-pulls=false \
  --container-runtime=docker \
  --register-node \
  --tls-cert-file=/etc/kubernetes/ssl/k8s-root-ca.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/k8s-root-ca-key.pem \
  --root-dir=/data/kubelet"
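The --cluster-dns address has to fall inside the range given to --service-cluster-ip-range on the Master, because the DNS Service receives its cluster IP from that range. A pure-bash sketch for checking this before rolling the config out could look as follows; ip_to_int and ip_in_cidr are hypothetical helper names, not Kubernetes tools.

```shell
#!/usr/bin/env bash
# ip_to_int A.B.C.D: prints the address as a 32-bit unsigned integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP NETWORK/LEN: succeeds if IP lies inside the CIDR block.
ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}

# Values taken from this document's kubelet and kube-apiserver configs.
if ip_in_cidr 172.8.0.2 172.8.0.0/16; then
  echo "cluster-dns is inside the service range"
else
  echo "cluster-dns is OUTSIDE the service range" >&2
fi
```

The same check applies to 172.8.0.1, the Services API address listed in the kubernetes certificate's hosts.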

kubelet unit file /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/kubelet
ExecStart=/usr/local/bin/kubelet \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBELET_API_SERVER \
  $KUBELET_ADDRESS \
  $KUBELET_PORT \
  $KUBELET_HOSTNAME \
  $KUBE_ALLOW_PRIV \
  $KUBELET_POD_INFRA_CONTAINER \
  $KUBELET_ARGS
Restart=on-failure

[Install]
WantedBy=multi-user.target
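Because kubelet refuses to start while swap is enabled, a quick preflight check before starting the service can save a debugging round. The sketch below assumes the Linux /proc/swaps layout (one header line, then one line per active swap device); swap_active is a made-up helper name.

```shell
#!/usr/bin/env bash
# swap_active: reads /proc/swaps-formatted text on stdin and succeeds
# if any swap device is listed after the header line.
swap_active() {
  awk 'NR > 1 && NF > 0 { found = 1 } END { exit (found ? 0 : 1) }'
}

# Only run the live check where /proc/swaps is readable (Linux).
if [ -r /proc/swaps ] && swap_active < /proc/swaps; then
  echo "swap is enabled: run swapoff -a before starting kubelet"
else
  echo "no active swap detected"
fi
```

Note that swapoff -a does not survive a reboot; any swap entry in /etc/fstab has to be removed or commented out separately.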

Start kubelet:

systemctl enable kubelet
systemctl start kubelet

kube-proxy

Install the dependency package:

yum install -y conntrack-tools

kube-proxy config file /etc/kubernetes/proxy

###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--bind-address=10.0.0.3 \
  --hostname-override=10.0.0.3 \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
  --cluster-cidr=172.7.0.0/16"

kube-proxy unit file /usr/lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
EnvironmentFile=-/etc/kubernetes/config
EnvironmentFile=-/etc/kubernetes/proxy
ExecStart=/usr/local/bin/kube-proxy \
  $KUBE_LOGTOSTDERR \
  $KUBE_LOG_LEVEL \
  $KUBE_MASTER \
  $KUBE_PROXY_ARGS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Start kube-proxy:

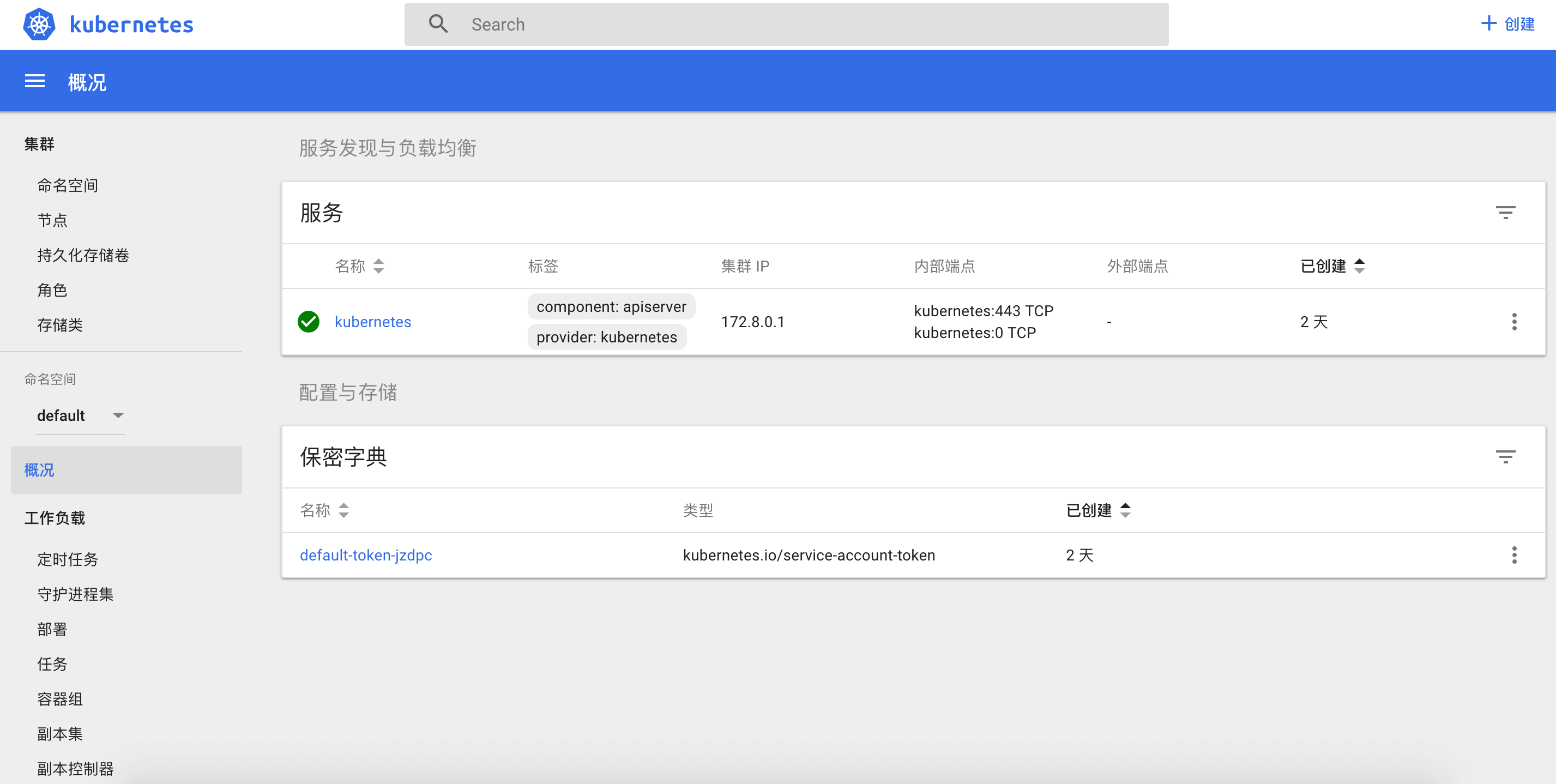

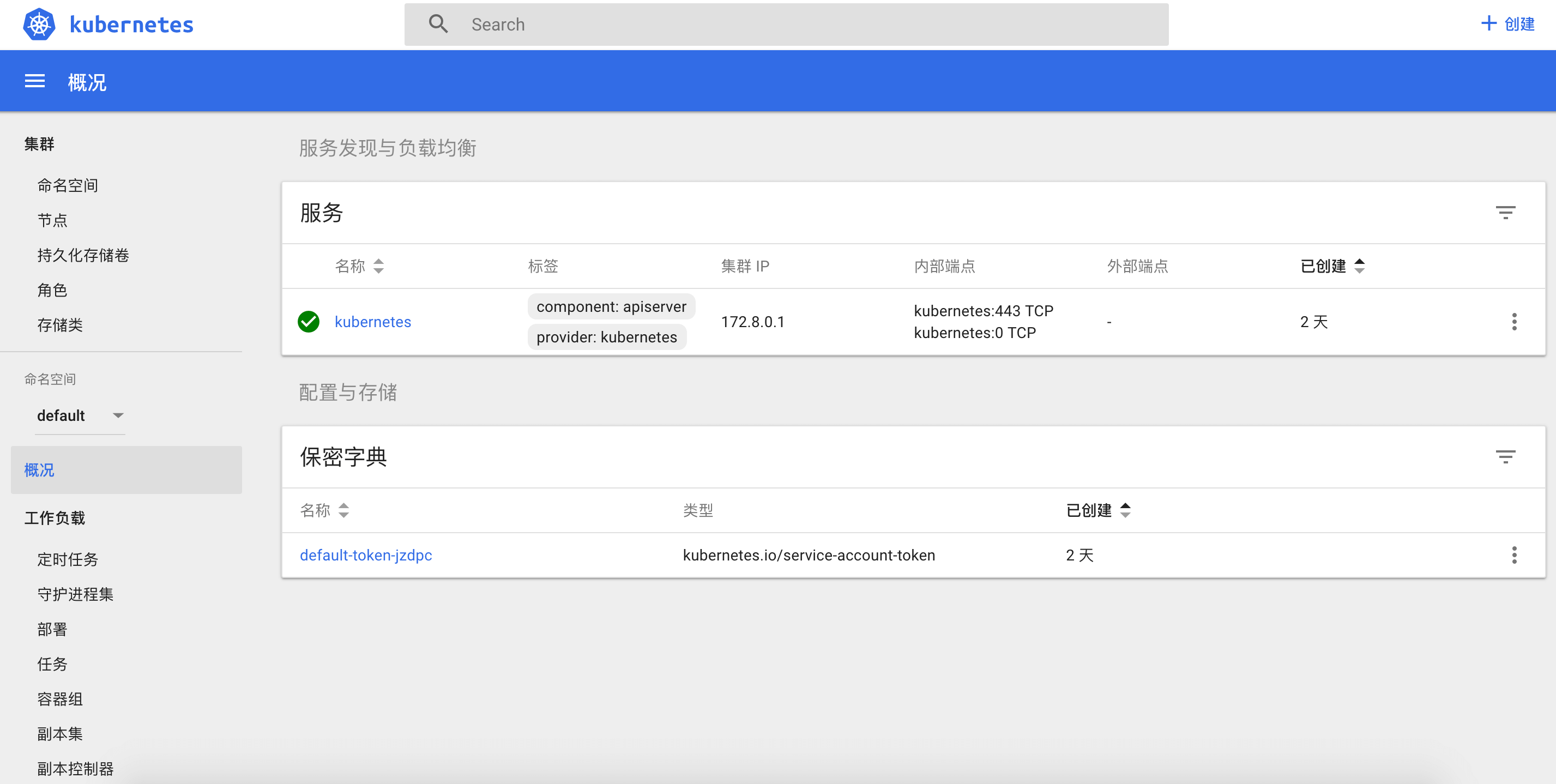

systemctl enable kube-proxy
systemctl start kube-proxy

Verify the Nodes

Run the following on the 10.0.0.2 Master. A STATUS of Ready means the node is healthy; otherwise, recheck the whole installation.

kubectl get nodes
NAME       STATUS    ROLES     AGE       VERSION
10.0.0.2   Ready     <none>    1m        v1.9.6

Dashboard setup

Perform these steps on the 10.0.0.2 Master node. For the yaml files, see https://github.com/kubernetes/kube-state-metrics/tree/master/kubernetes

kube-state-metrics.yaml

kubectl apply -f kube-state-metrics.yaml

kube-state-metrics-deploy.yaml

kubectl apply -f kube-state-metrics-deploy.yaml

For the dashboard yaml files, see https://github.com/kubernetes/dashboard/blob/master/src/deploy/recommended/kubernetes-dashboard.yaml

Pay particular attention to the certificates and the docker image address. The yaml below has the certificate parts removed.

dashboard-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dashboard
subjects:
  - kind: ServiceAccount
    name: dashboard
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

dashboard-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

dashboard-controller.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      serviceAccountName: dashboard
      containers:
      - name: kubernetes-dashboard
        image: hub.linuxeye.com/google_containers/kubernetes-dashboard-amd64:v1.8.3
        resources:
          limits:
            cpu: 1000m
            memory: 2000Mi
          requests:
            cpu: 1000m
            memory: 2000Mi
        ports:
        - containerPort: 9090
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

Apply the dashboard yaml files:

kubectl apply -f dashboard-rbac.yaml
kubectl apply -f dashboard-service.yaml
kubectl apply -f dashboard-controller.yaml

Verify that the dashboard is running:

kubectl get pods -n kube-system -o wide
NAME                                    READY     STATUS    RESTARTS   AGE       IP           NODE
kube-state-metrics-7859f12bfb-k22m9     2/2       Running   0          19h       172.7.17.2   10.0.0.2
kubernetes-dashboard-745998bbd4-n9dfd   1/1       Running   0          18h       172.7.17.3   10.0.0.2

Pods that stay in the Running state are healthy. If a pod does not, inspect its log for errors:

kubectl logs kubernetes-dashboard-745998bbd4-n9dfd -n kube-system

Finally, the kubernetes-dashboard UI. Access it through the Master API proxy at http://10.0.0.2:8080/api/v1/namespaces/kube-system/services/kubernetes-dashboard/proxy/

When reposting, please keep the permalink: https://linuxeye.com/Linux/kubernetes.html